Behind every AI-powered application or software solution sits an entire AI tech stack, a largely hidden set of layers that powers its functionality. At the bottom of the stack is the data, which acts as the raw material for every AI application. Above that are the algorithms, which provide the mathematical logic for processing that data.

There is more to the AI tech stack than that, and this article covers most of it. Read to the end to understand the future of AI and its implementation capabilities.

Understanding AI Technology Stack

In simple terms, an AI stack is a collection of frameworks, infrastructure components, and technologies that together make it possible to build and run AI systems. A dedicated tech stack gives developers a structure for building AI solutions, with the components arranged in layers that support the full AI lifecycle.

Like a conventional technology stack for software development, the AI stack organizes its elements into layers that work together. This supports efficiency and scalability in AI implementations, and it breaks the complex process of developing an AI solution into smaller, manageable components.

Every layer within the AI technology stack serves a dedicated function, from data handling to model deployment. Viewing the stack layer by layer makes it easier to allocate resources, address challenges, and identify dependencies. This modular view is especially helpful when you work in a large team with shared responsibilities.

Key Components of an AI Stack

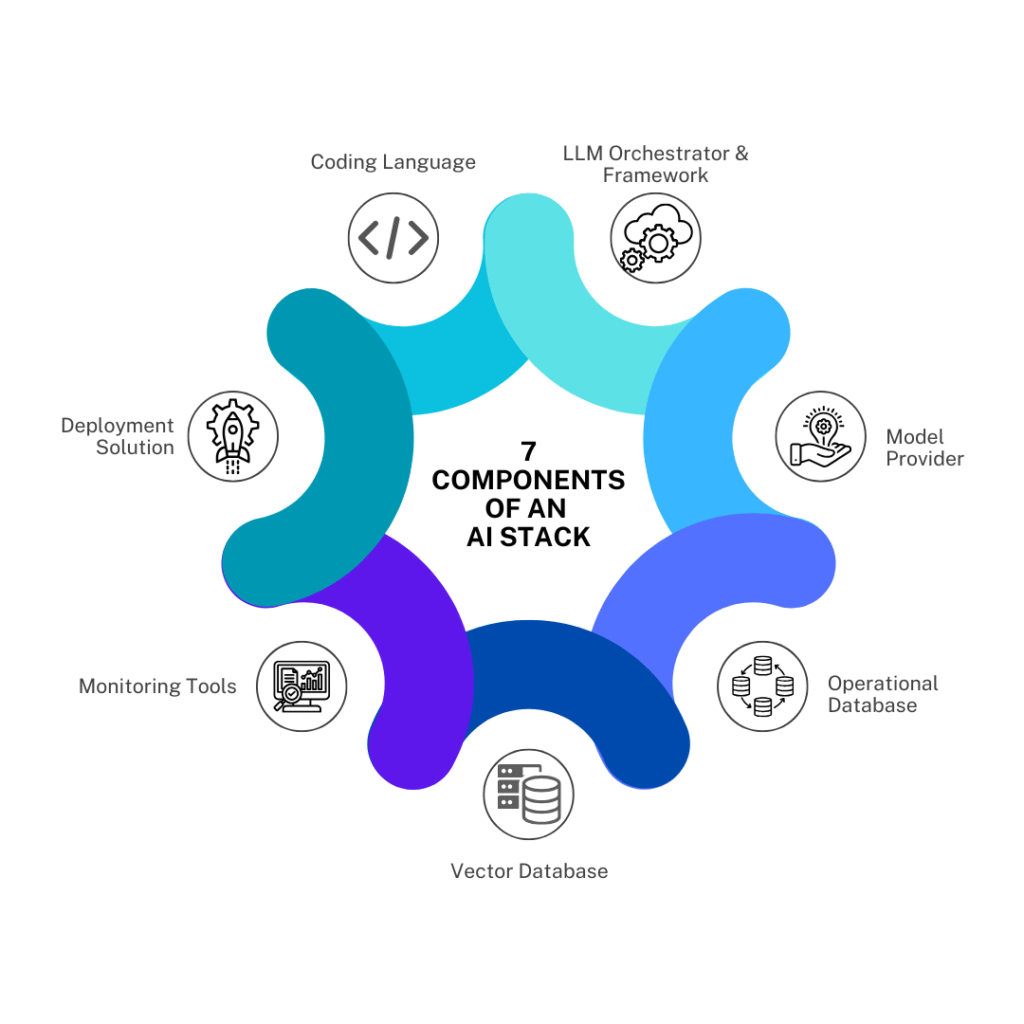

The key components that combine to form an AI stack are:

- Coding Language: The language developers use to build the stack components, including the source and integration code of the AI application.

- LLM Orchestrator & Framework: The library that abstracts the complexities involved in integrating the components of modern AI applications. It also provides methods and integration packages, and tools in this category typically offer ways to create, modify, and manipulate prompts or to condition LLMs for different purposes.

- Model Provider: An organization that offers access to foundation models through inference endpoints or other means.

- Operational Database: The data storage infrastructure for operational or transactional data.

- Vector Database: A dedicated data storage solution for vector embeddings. Products in this category provide features to store, manage, and search through those embeddings.

- Monitoring Tools: Tools used for tracking the reliability and performance of an AI model. They also provide alerts and analytics that help improve the AI application.

- Deployment Solution: Services that streamline the deployment of the AI model by managing integration and scalability with the existing infrastructure.
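
To make the vector database component a little more concrete, here is a minimal sketch of what "store and search embeddings" means in practice. It is a toy, in-memory illustration and not how any particular product works: `ToyVectorStore`, the document IDs, and the three-dimensional vectors are all made up for the example, and a real application would get its embeddings from a model provider.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Hypothetical in-memory stand-in for a vector database."""

    def __init__(self):
        self._items = []  # list of (doc_id, embedding) pairs

    def add(self, doc_id, embedding):
        self._items.append((doc_id, list(embedding)))

    def search(self, query, top_k=1):
        """Return the top_k (doc_id, score) pairs, best match first."""
        scored = [
            (doc_id, cosine_similarity(query, emb))
            for doc_id, emb in self._items
        ]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


store = ToyVectorStore()
# Hand-written vectors (assumption); real embeddings come from a model.
store.add("refund-policy", [0.9, 0.1, 0.0])
store.add("shipping-times", [0.1, 0.9, 0.1])
store.add("api-reference", [0.0, 0.2, 0.9])

results = store.search([0.8, 0.2, 0.1], top_k=2)
print(results)
```

The search here compares the query against every stored vector, which is fine for a handful of items. Production vector databases generally rely on approximate indexes to keep search fast at scale.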

What is an AI Layer?

A standard technology stack consists of application, back-end, data, and operational layers. When the stack powers a modern AI application, an additional AI layer is introduced alongside them.

The AI layer introduces core intelligence across the standard technology stacks, and that happens through:

- Descriptive or generative capabilities within the application layer.

- Predictive analysis in the data layer.

- Process optimization & automation in the operational layer.

- Semantic routing in the back-end layer.
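
Of the four points above, semantic routing is probably the least familiar, so here is a rough sketch of the idea: the back end picks a handler based on what a request means instead of a fixed URL or keyword rule. Everything in it is an assumption made for illustration. The route names and phrases are invented, and the `embed` function is a simple bag-of-words count that only stands in for a real embedding model.

```python
from collections import Counter
import math


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def similarity(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


# Hypothetical routes: each handler is described by a few example words.
ROUTES = {
    "billing": embed("invoice payment refund charge billing card"),
    "support": embed("error bug crash login password reset"),
    "sales": embed("pricing plan demo quote upgrade"),
}


def route(query, fallback="general", threshold=0.1):
    """Pick the route most similar to the query, or the fallback."""
    query_vec = embed(query)
    best_name, best_score = fallback, 0.0
    for name, route_vec in ROUTES.items():
        score = similarity(query_vec, route_vec)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else fallback


print(route("I need a refund for a duplicate charge"))
print(route("my login fails after password reset"))
print(route("what is the weather today"))
```

With word counts, the router only matches exact words. Swapping `embed` for a real embedding model is what would let it also match paraphrases, such as "money back" for "refund".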

Dedicated Layers of an AI Stack

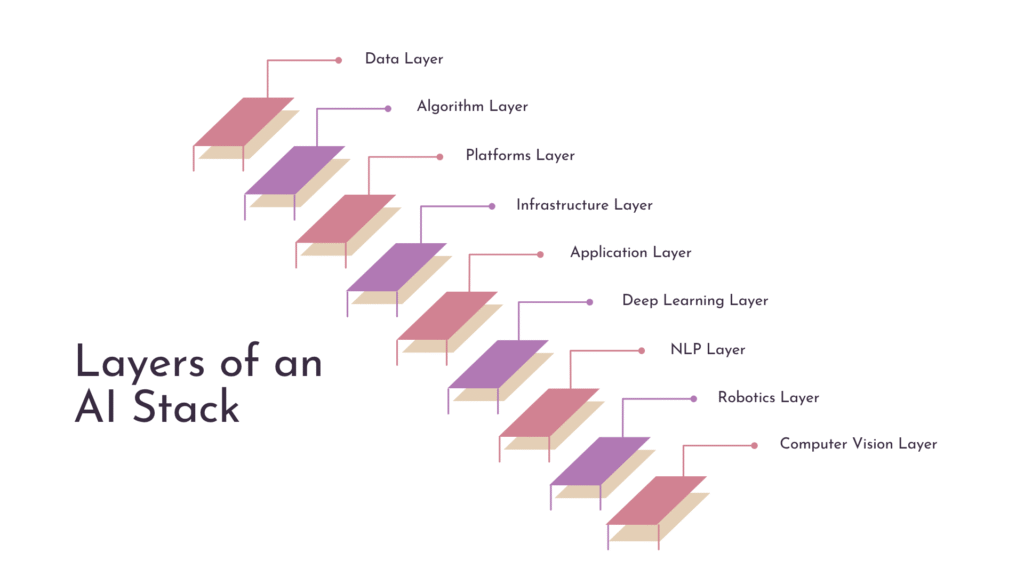

Developers working with an AI tech stack will come across these priority layers that are operationally different from those of the traditional stacks:

1. Data Layer: Data is the foundation of any AI stack, because AI systems need large amounts of data to train ML models and make decisions. This layer covers data collection, preprocessing, cleaning, and storage.

2. Algorithm Layer: This layer provides the mathematical models and algorithms that the AI system runs on. Using them, the system can extract insights and patterns from the given data. It covers machine learning, deep learning, and other statistical models.

3. Platforms Layer: This layer gives developers the frameworks and tools they need for building, deploying, and maintaining AI applications. It includes coding languages, frameworks, and libraries such as scikit-learn, PyTorch, and TensorFlow.

4. Infrastructure Layer: This layer houses the software and hardware components that support the operation of an AI system. Here, you will find GPUs, CPUs, and other specialized hardware, along with containerization and virtualization tools.

5. Application Layer: This layer houses the specific applications and use cases that put AI technologies to work, such as recommendation systems, chatbots, and autonomous vehicles.

6. Deep Learning Layer: Deep learning is a subset of machine learning built on artificial neural networks. The purpose of this layer is to let your AI systems learn from very large data sets.

7. NLP Layer: This layer uses models and algorithms that enable AI systems to understand and process human language.

8. Robotics Layer: This layer supports the use of AI technologies for automating or controlling physical machines.

9. Computer Vision Layer: This layer uses models and algorithms to analyze and interpret visual data from images or videos.
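
As a rough illustration of how the first few layers hand work to each other, the sketch below maps the data, algorithm, and application layers onto three small functions. It assumes a deliberately simple model (a straight line fitted by ordinary least squares) and made-up numbers. The function names are hypothetical, and a real project would normally lean on a platform-layer library such as scikit-learn instead of hand-written math.

```python
def clean(records):
    """Data layer: drop rows with missing values and convert to floats."""
    cleaned = []
    for x, y in records:
        if x is None or y is None:
            continue
        cleaned.append((float(x), float(y)))
    return cleaned


def fit_line(points):
    """Algorithm layer: least squares fit of y = slope * x + intercept.

    Assumes at least two points with different x values.
    """
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Application layer: use the fitted model to answer a request."""
    slope, intercept = model
    return slope * x + intercept


# Made-up data: (ad spend, sales) pairs, with one incomplete row.
raw = [(1, 3), (2, 5), (None, 4), (3, 7), (4, 9)]
model = fit_line(clean(raw))
print(model)
print(predict(model, 5))
```

Because each layer is a separate function, the cleaning rules or the model can be swapped without touching the code that serves predictions, which is the practical benefit of the layered view.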

How Does AI Stack Compare to the Traditional Tech Stack?

| Aspect | Traditional Tech Stack | AI Stack |
| --- | --- | --- |
| Focus | Here, the focus is mostly on the static systems built to operate with fixed inputs and outputs. | The AI stack is meant for adaptive systems that continually learn and evolve. |
| Data Processing | Data processing attributes are rule-based and pre-defined. | It features continuous learning with big datasets. |
| Tools | The traditional tech stack comes with standard coding frameworks. | There are AI-specific frameworks to fulfill the project needs, such as PyTorch and TensorFlow. |
| Flexibility | There is limited flexibility or adaptability with traditional tech stack. | AI stack is immensely flexible and scalable. |
| Core Components | Databases, basic tools, and servers. | ML models, advanced frameworks, and data pipelines. |
| Use Cases | The traditional tech stack is mostly used for web apps, reporting, and transaction processing. | AI stack is used for NLP, intelligent automation, and predictive analytics. |
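
The difference between rule-based processing and continuous learning can be shown with a very small example. The sketch below is only a toy under stated assumptions: the 1000 limit, the factor of 3, and the `AdaptiveDetector` class are all invented, and a running average is a far simpler form of "learning" than the models an actual AI stack would train.

```python
def fixed_rule(amount, limit=1000.0):
    """Traditional approach: a hard-coded threshold that never changes."""
    return amount > limit


class AdaptiveDetector:
    """Toy learning approach: the threshold tracks the data seen so far."""

    def __init__(self, factor=3.0):
        self.factor = factor
        self.count = 0
        self.mean = 0.0

    def update(self, amount):
        """Incrementally update the running mean with one observation."""
        self.count += 1
        self.mean += (amount - self.mean) / self.count

    def is_unusual(self, amount):
        """Flag amounts far above the running mean (never before any data)."""
        return self.count > 0 and amount > self.factor * self.mean


detector = AdaptiveDetector()
for amount in [20.0, 25.0, 30.0, 25.0]:
    detector.update(amount)

# The fixed rule compares against 1000; the detector against 3 * mean.
print(fixed_rule(400.0))
print(detector.is_unusual(400.0))
```

The fixed rule gives the same answer no matter what data it has seen, while the detector's answer shifts as new observations arrive.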

Conclusion

Understanding what an AI stack is and how it drives the development of scalable models is the first step toward using it for your business growth. Keep in mind that every layer of the stack has a role to play in adding value to your business applications.

Businesses that can successfully integrate AI capabilities into their systems or applications could increase their profitability by 38% in the next 10 years. Therefore, it becomes more crucial than ever for you to integrate AI into your business strategy.

Dive deeper into the capabilities of AI in software development, and be part of the emerging business trends!